https://arxiv.org/abs/2309.03409

Note

The core idea: ask GPT to improve the current best prompt given some examples, then iterate. (I think feeding back the examples the prompt performs poorly on will improve this method, so I added that step to the demo code at the end.)

Optimization by PROmpting (OPRO)

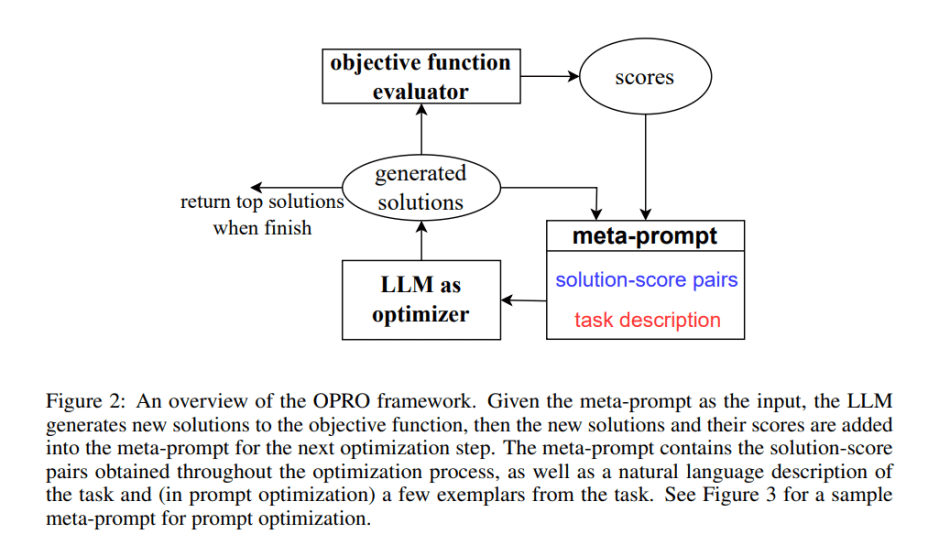

The researchers guide the optimization process by instructing the LLM to iteratively generate new solutions based on a natural-language description of the problem and previously discovered solutions.

At each step, OPRO builds a meta-prompt containing a description of the optimization problem and previously evaluated solutions with their scores. The LLM takes this meta-prompt as input and generates candidate solutions. These new solutions are then evaluated and added to the meta-prompt for the next optimization step. The process stops when the LLM can no longer propose solutions with higher scores or when the maximum number of optimization steps is reached. For prompt optimization, the objective is to find a prompt that maximizes task accuracy.
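The loop described above can be sketched without any API calls. This is a minimal illustration under stated assumptions, not the paper's implementation: the names `build_meta_prompt`, `opro_loop`, `top_k`, and `patience` are hypothetical, the meta-prompt wording is invented for the example, and the "LLM" and scorer are toy stand-ins passed in as plain functions.

```python
from typing import Callable, List, Tuple


def build_meta_prompt(task_description: str,
                      history: List[Tuple[str, float]],
                      top_k: int = 3) -> str:
    """Assemble a meta-prompt from the task description and the best past solutions."""
    # Sort ascending by score so the strongest solutions appear last.
    best = sorted(history, key=lambda pair: pair[1])[-top_k:]
    lines = [task_description, "", "Previous solutions and their scores:"]
    for solution, score in best:
        lines.append(f"solution: {solution} | score: {score:.2f}")
    lines.append("")
    lines.append("Propose a new solution that scores higher.")
    return "\n".join(lines)


def opro_loop(task_description: str,
              initial_solution: str,
              propose: Callable[[str], str],
              evaluate: Callable[[str], float],
              max_steps: int = 10,
              patience: int = 2) -> Tuple[str, float]:
    """Propose, evaluate, and record solutions until progress stalls or max_steps is hit."""
    history = [(initial_solution, evaluate(initial_solution))]
    best_solution, best_score = history[0]
    stale = 0  # consecutive steps without improvement
    for _ in range(max_steps):
        candidate = propose(build_meta_prompt(task_description, history))
        score = evaluate(candidate)
        history.append((candidate, score))
        if score > best_score:
            best_solution, best_score = candidate, score
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break
    return best_solution, best_score


# Toy stand-ins (assumptions): the "LLM" appends "!" to the last listed solution,
# and the score rewards up to three exclamation marks.
def toy_propose(meta_prompt: str) -> str:
    last = [l for l in meta_prompt.splitlines() if l.startswith("solution: ")][-1]
    return last[len("solution: "):].split(" | score:")[0] + "!"


def toy_evaluate(solution: str) -> float:
    return min(solution.count("!"), 3) / 3


print(opro_loop("Maximize the score.", "hi", toy_propose, toy_evaluate))
# -> ('hi!!!', 1.0)
```

In a real run, `propose` would wrap an LLM call and `evaluate` would compute task accuracy on a dataset. Passing both in as functions keeps the loop itself testable offline.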

from openai import OpenAI

# Initialize the OpenAI client (this sketch assumes openai>=1.0)
client = OpenAI(api_key='YOUR_OPENAI_API_KEY')

# Maximum number of optimization steps (5 is an arbitrary choice; adjust as necessary)
NUM_ITERATIONS = 5


def evaluate_prompt(prompt, dataset):
    """
    Evaluate the effectiveness of a prompt using a given dataset.

    Returns a tuple of the accuracy score and a list of the examples the prompt got wrong.
    """
    score = 0
    poor_performers = []
    for data in dataset:
        input_data = data['input']
        expected_output = data['output']
        # GPT-4 models are served through the chat completions endpoint
        response = client.chat.completions.create(
            model="gpt-4",
            messages=[{"role": "user", "content": prompt + input_data}],
            max_tokens=50  # adjust as necessary
        )
        generated_output = (response.choices[0].message.content or "").strip()
        if generated_output == expected_output:
            score += 1
        else:
            poor_performers.append(data)
    accuracy = score / len(dataset)
    return accuracy, poor_performers


def optimize_prompt(initial_prompt, dataset, max_poor_examples=5):
    """
    Optimize a prompt using the OPRO framework, focusing on poor-performing examples.
    """
    best_prompt = initial_prompt
    best_score, poor_performers = evaluate_prompt(initial_prompt, dataset)
    for iteration in range(NUM_ITERATIONS):
        if not poor_performers:
            break  # every example already passes, so there is nothing left to improve
        # Select a subset of poorly performed examples to focus on
        focus_examples = poor_performers[:max_poor_examples]
        examples_text = "\n".join(item['input'] + " -> " + item['output'] for item in focus_examples)
        meta_prompt = (
            "Improve the following prompt: '{}'\n\n"
            "Here are some examples where the current prompt struggles:\n\n"
            "{}\n\n"
            "Return only the improved prompt."
        ).format(best_prompt, examples_text)
        response = client.chat.completions.create(
            model="gpt-4-turbo",
            messages=[{"role": "user", "content": meta_prompt}],
            max_tokens=200  # adjust as necessary
        )
        new_prompt = (response.choices[0].message.content or "").strip()
        new_score, new_poor_performers = evaluate_prompt(new_prompt, dataset)
        # Keep the new prompt only if it beats the best score so far
        if new_score > best_score:
            best_score = new_score
            best_prompt = new_prompt
            poor_performers = new_poor_performers
    return best_prompt


# Example usage
dataset = [
    {'input': "Translate 'hello' to Spanish.", 'output': "hola"},
    {'input': "Translate 'goodbye' to Spanish.", 'output': "adiós"}
    # ... add more examples as necessary
]

initial_prompt = "Translate the following English word to Spanish: "
optimized_prompt = optimize_prompt(initial_prompt, dataset)
print(f"Optimized Prompt: {optimized_prompt}")
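One practical caveat about the demo: `evaluate_prompt` compares outputs with exact string equality, so an answer like "Hola." would count as wrong against the expected "hola". A possible workaround is to normalize both sides before comparing. The sketch below is an assumption of mine, not part of the paper or the demo above, and the helper names `normalize_answer` and `is_match` are hypothetical.

```python
import string
import unicodedata


def normalize_answer(text: str) -> str:
    """Lowercase, trim whitespace, and strip surrounding punctuation and quotes."""
    # NFC makes composed and decomposed accented characters compare equal.
    text = unicodedata.normalize("NFC", text).strip().lower()
    return text.strip(string.punctuation + "¡¿ ")


def is_match(generated: str, expected: str) -> bool:
    """Compare two answers after normalization."""
    return normalize_answer(generated) == normalize_answer(expected)


print(is_match("Hola.", "hola"))
print(is_match("hola", "adiós"))
```

Whether this leniency is appropriate depends on the task. For tasks where casing or punctuation matters, exact matching may still be the right choice.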