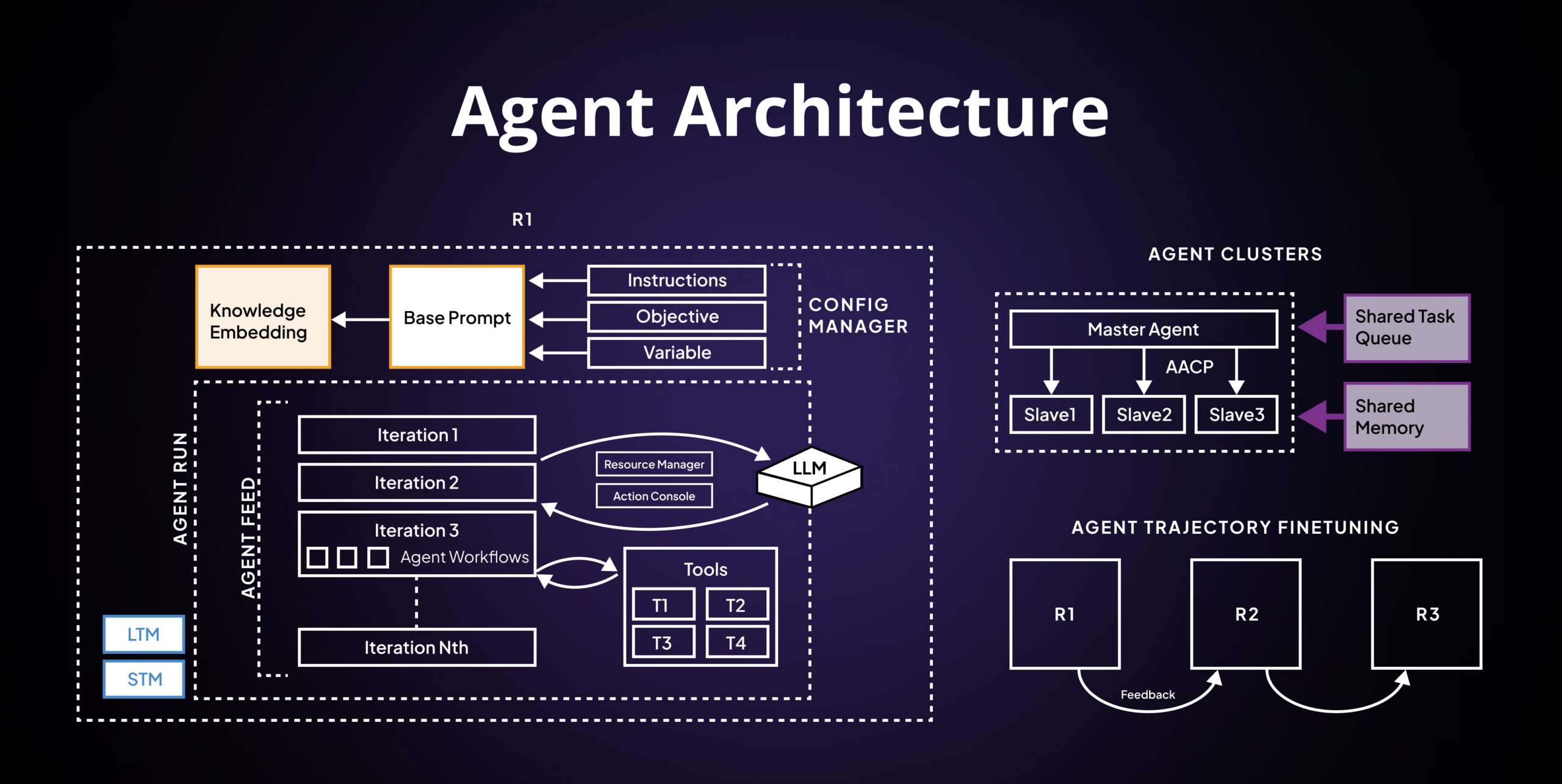

1. Knowledge Initialization:

- Knowledge Embedding: The initial foundation for our LLM. Before the model processes tasks or queries, it’s equipped with a vast amount of knowledge sourced from books, websites, and other textual data. This pre-trained knowledge helps the LLM understand context, make predictions, and provide relevant answers.

- Base Prompt: This serves as the initial instruction or query for the LLM. It could be a question, a statement, or a task, guiding the LLM on what to focus on.

2. Iterative Learning and Task Processing:

The distinct iterations represent the agent’s (in this case, the LLM’s) ability to handle tasks sequentially, refining its responses based on feedback or new data.

- Agent Workflows: These are the sequences of operations the LLM undergoes in each iteration. They might involve interpreting the input, searching its knowledge base, predicting the best response, and then outputting that response.
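The iteration described above can be sketched as a simple loop. This is a minimal illustration, not the architecture's actual implementation: `run_agent`, `LLMFn`, and `echo_llm` are hypothetical names, and the "LLM" is a toy function standing in for a real model call.

```python
from typing import Callable

# Hypothetical stand-in for an LLM call: any function mapping a prompt to text.
LLMFn = Callable[[str], str]


def run_agent(base_prompt: str, tasks: list[str], llm: LLMFn) -> list[str]:
    """Process tasks sequentially, feeding earlier outputs into later prompts."""
    history: list[str] = []  # outputs from earlier iterations
    for task in tasks:
        # Interpret the input: combine the base prompt, prior results, and the new task.
        context = "\n".join(history)
        prompt = f"{base_prompt}\nPrevious results:\n{context}\nTask: {task}"
        # Predict a response and record it so the next iteration can use it.
        response = llm(prompt)
        history.append(response)
    return history


def echo_llm(prompt: str) -> str:
    """Toy model: returns the last line of the prompt in upper case."""
    return prompt.splitlines()[-1].upper()


outputs = run_agent("You are a helpful agent.", ["summarize", "translate"], echo_llm)
print(outputs)  # ['TASK: SUMMARIZE', 'TASK: TRANSLATE']
```

Passing the model in as a plain function keeps the loop independent of any particular LLM provider.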

3. LLM at the Core:

- LLM (Large Language Model): Acting as the brain of this architecture, the LLM processes inputs, understands context, and generates outputs. Its large size implies it can handle a vast array of topics and produce coherent, contextually relevant responses.

- Resource Manager and Action Console: These components likely assist the LLM in task management. While the LLM predicts responses, the Resource Manager might allocate necessary computational resources, and the Action Console could be where actions are finalized and executed.

4. Tools and Extensions:

The diagram showcases various tools, labeled from T1 to T4. These could be external plugins or modules that the LLM can call. For example, if the LLM needs to perform a mathematical calculation, translate text, or query a specific database, these tools can handle such specialized tasks.
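One common way to wire up such tools is a registry that maps a tool name to a callable. The sketch below assumes that pattern. The keys `T1` and `T2` borrow the diagram's labels, but what each tool does here is invented for illustration, and `call_tool` is a hypothetical helper.

```python
from typing import Callable

# Hypothetical tool registry. The behavior of each tool is made up for this example.
TOOLS: dict[str, Callable[[str], str]] = {
    "T1": lambda arg: str(sum(float(x) for x in arg.split("+"))),  # toy calculator
    "T2": lambda arg: arg[::-1],                                   # toy text transform
}


def call_tool(name: str, argument: str) -> str:
    """Dispatch a tool request; return an error string for unknown tools."""
    tool = TOOLS.get(name)
    if tool is None:
        return f"error: unknown tool {name!r}"
    return tool(argument)


print(call_tool("T1", "2+3.5"))  # 5.5
print(call_tool("T9", "x"))      # error: unknown tool 'T9'
```

Returning an error string rather than raising lets the agent pass the failure back to the LLM as ordinary text.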

5. Agent Clusters:

- Shared Task Queue & Shared Memory: These components hint at a distributed system. Tasks might be queued up and handled by different agent instances (like Master Agent, Slave1, Slave2, etc.). Shared Memory allows all these agents to access common data, ensuring consistency and efficiency.

- AACP (Agent to Agent Communication Protocol): A protocol or interface that allows the agents to communicate with one another.
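The shared queue and shared memory idea can be sketched with threads in a single process. This is an assumption-laden simplification: a real agent cluster would likely span processes or machines, and AACP itself is not modeled here. The names `task_queue`, `shared_memory`, and `worker` are hypothetical, and squaring a number stands in for real agent work.

```python
import queue
import threading

task_queue = queue.Queue()          # shared task queue
shared_memory: dict[int, int] = {}  # shared memory: task -> result
memory_lock = threading.Lock()      # guards writes to shared_memory


def worker() -> None:
    """Pull tasks until a None sentinel arrives, storing results in shared memory."""
    while True:
        task = task_queue.get()
        if task is None:
            break
        result = task * task        # placeholder for real agent work
        with memory_lock:
            shared_memory[task] = result


# Two worker agents consume from the same queue.
workers = [threading.Thread(target=worker) for _ in range(2)]
for w in workers:
    w.start()
for task in range(5):
    task_queue.put(task)
for _ in workers:
    task_queue.put(None)            # one stop signal per worker
for w in workers:
    w.join()

print(sorted(shared_memory.items()))  # [(0, 0), (1, 1), (2, 4), (3, 9), (4, 16)]
```

Which worker handles which task is not deterministic, but the contents of `shared_memory` after all workers finish are.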

6. Agent Trajectory Finetuning:

The “R1, R2, R3” boxes along with feedback loops suggest a reinforcement learning setup. The LLM could be trained further based on feedback from its outputs, refining its predictions and enhancing accuracy over time.
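One simple way to turn such feedback into training data is to log each trajectory with its reward and keep only the high-scoring ones for a later finetuning run. The sketch below shows that filtering step only. It is not a full reinforcement learning algorithm and may not match what the diagram intends. `Trajectory`, `select_for_finetuning`, and the reward values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    prompt: str
    response: str
    reward: float  # feedback score, standing in for R1, R2, R3 in the diagram


def select_for_finetuning(
    trajectories: list[Trajectory], min_reward: float
) -> list[dict[str, str]]:
    """Keep trajectories whose reward meets the threshold, as prompt/response pairs."""
    return [
        {"prompt": t.prompt, "response": t.response}
        for t in trajectories
        if t.reward >= min_reward
    ]


# Made-up logged trajectories for illustration.
logged = [
    Trajectory("2+2?", "4", reward=1.0),
    Trajectory("2+2?", "5", reward=0.0),
    Trajectory("Capital of France?", "Paris", reward=0.9),
]
dataset = select_for_finetuning(logged, min_reward=0.5)
print(dataset)
```

The resulting list of prompt/response pairs has the shape that supervised finetuning pipelines commonly expect, though the exact format depends on the training framework.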